import os, glob, time, random, argparse
import cv2
import numpy as np
import PIL
from matplotlib import pyplot as plt
from sklearn.preprocessing import LabelEncoder
from sklearn.model_selection import train_test_split

path = "E:/python_projects/whale_dectection/trainimage"
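The loops in this notebook collect images with `glob` and the pattern `'*.jpg'`. On Windows that pattern also matches `.JPG`, because matching ignores case there, but on case-sensitive platforms such as Linux the upper-case files would be skipped. A minimal sketch of a listing that does not depend on that behavior, assuming one subdirectory per species; `list_images` and `IMAGE_EXTENSIONS` are hypothetical names, not part of the notebook:

```python
from pathlib import Path

# Extensions treated as images; this set is an assumption for illustration.
IMAGE_EXTENSIONS = {".jpg", ".jpeg"}


def list_images(root):
    """Return (file path, class name) pairs for images one level below root."""
    pairs = []
    for class_dir in sorted(Path(root).iterdir()):
        if not class_dir.is_dir():
            continue  # ignore stray files next to the class folders
        for file in sorted(class_dir.iterdir()):
            # Compare the lower-cased suffix so .jpg and .JPG both match.
            if file.is_file() and file.suffix.lower() in IMAGE_EXTENSIONS:
                pairs.append((str(file), class_dir.name))
    return pairs
```

Calling `list_images(path)` would return pairs that can be passed straight to `pd.DataFrame(..., columns=['path', 'label'])`.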

# resize every image in each class subdirectory to 224x224, in place
for subdir in os.listdir(path):
    # find .jpg files in the subdirectory (on Windows this also matches .JPG)
    for filename in glob.glob(os.path.join(path, subdir, '*.jpg')):
        # read image (OpenCV loads it in BGR channel order)
        img = cv2.imread(filename)
        if img is None:
            # skip files OpenCV cannot decode
            continue
        # print the dimensions
        #print(img.shape)
        # resize all images to a common size
        img = cv2.resize(img, (224, 224))
        # save image; cv2.imwrite expects BGR, so the image is not converted
        # to RGB first (doing so would swap red and blue in the saved file)
        cv2.imwrite(filename, img)
        print(f" {filename} saved")

E:/python_projects/whale_dectection/trainimage\beluga whale\beluga1.jpg saved

E:/python_projects/whale_dectection/trainimage\beluga whale\beluga2.jpg saved

E:/python_projects/whale_dectection/trainimage\beluga whale\beluga3.jpg saved

E:/python_projects/whale_dectection/trainimage\beluga whale\beluga4.jpg saved

E:/python_projects/whale_dectection/trainimage\beluga whale\beluga5.jpg saved

E:/python_projects/whale_dectection/trainimage\beluga whale\beluga6.jpg saved

E:/python_projects/whale_dectection/trainimage\beluga whale\beluga7.jpg saved

E:/python_projects/whale_dectection/trainimage\beluga whale\beluga8.jpg saved

E:/python_projects/whale_dectection/trainimage\beluga whale\beluga9.jpg saved

E:/python_projects/whale_dectection/trainimage\blue whale\blue1.JPG saved

E:/python_projects/whale_dectection/trainimage\blue whale\blue2.JPG saved

E:/python_projects/whale_dectection/trainimage\bowhead whale\bowhead1.jpg saved

E:/python_projects/whale_dectection/trainimage\fin whale\finn1.JPG saved

E:/python_projects/whale_dectection/trainimage\fin whale\finn2.JPG saved

E:/python_projects/whale_dectection/trainimage\ganges dolphin\ganges 1.jpg saved

E:/python_projects/whale_dectection/trainimage\ganges dolphin\ganges 2.jpg saved

E:/python_projects/whale_dectection/trainimage\ganges dolphin\ganges 3.jpg saved

E:/python_projects/whale_dectection/trainimage\ganges dolphin\ganges 4.jpg saved

E:/python_projects/whale_dectection/trainimage\ganges dolphin\ganges 5.jpg saved

E:/python_projects/whale_dectection/trainimage\ganges dolphin\ganges 6.jpg saved

E:/python_projects/whale_dectection/trainimage\gray whale\grey1.JPG saved

E:/python_projects/whale_dectection/trainimage\gray whale\grey2.JPG saved

E:/python_projects/whale_dectection/trainimage\humpback whale\humpback1.JPG saved

E:/python_projects/whale_dectection/trainimage\humpback whale\humpback2.JPG saved

E:/python_projects/whale_dectection/trainimage\humpback whale\humpback3.JPG saved

E:/python_projects/whale_dectection/trainimage\humpback whale\humpback4.JPG saved

E:/python_projects/whale_dectection/trainimage\humpback whale\humpback5.JPG saved

E:/python_projects/whale_dectection/trainimage\humpback whale\humpback6.JPG saved

E:/python_projects/whale_dectection/trainimage\humpback whale\humpback7.JPG saved

E:/python_projects/whale_dectection/trainimage\indoPacific dolphin\indopacific dolphin1.jpg saved

E:/python_projects/whale_dectection/trainimage\longfinnned whale\longfin1.JPG saved

E:/python_projects/whale_dectection/trainimage\river dolphin\river1.JPG saved

E:/python_projects/whale_dectection/trainimage\river dolphin\river10.JPG saved

E:/python_projects/whale_dectection/trainimage\river dolphin\river11.JPG saved

E:/python_projects/whale_dectection/trainimage\river dolphin\river12.JPG saved

E:/python_projects/whale_dectection/trainimage\river dolphin\river2.JPG saved

E:/python_projects/whale_dectection/trainimage\river dolphin\river3.JPG saved

E:/python_projects/whale_dectection/trainimage\river dolphin\river4.JPG saved

E:/python_projects/whale_dectection/trainimage\river dolphin\river5.JPG saved

E:/python_projects/whale_dectection/trainimage\river dolphin\river6.JPG saved

E:/python_projects/whale_dectection/trainimage\river dolphin\river7.JPG saved

E:/python_projects/whale_dectection/trainimage\river dolphin\river8.JPG saved

E:/python_projects/whale_dectection/trainimage\river dolphin\river9.JPG saved

E:/python_projects/whale_dectection/trainimage\sperm whale\sperm1.JPG saved

E:/python_projects/whale_dectection/trainimage\sperm whale\sperm2.JPG saved

E:/python_projects/whale_dectection/trainimage\sperm whale\sperm3.JPG saved

E:/python_projects/whale_dectection/trainimage\sperm whale\sperm4.JPG saved

E:/python_projects/whale_dectection/trainimage\sperm whale\sperm5.JPG saved

E:/python_projects/whale_dectection/trainimage\sperm whale\sperm6.JPG saved

# make tensorflow model
from tensorflow.keras.preprocessing.image import ImageDataGenerator
from tensorflow.keras.applications.mobilenet_v2 import MobileNetV2
from tensorflow.keras.layers import Dropout, Flatten, Dense
from tensorflow.keras.models import Model
from tensorflow.keras.optimizers import Adam
from tensorflow.keras.applications.mobilenet_v2 import preprocess_input
from tensorflow.keras.preprocessing.image import img_to_array
import pandas as pd
import tensorflow as tf
import keras
from keras.models import Sequential
from keras.layers import Dense, Dropout
from sklearn.model_selection import train_test_split
# VGG19 architecture (the ImageNet weights are downloaded when the model is built)
from keras.applications.vgg19 import VGG19

# subdirectories as class labels

classes = os.listdir(path)
species_to_label = {}
for i, species in enumerate(classes):
    species_to_label[species] = i
species_to_label
label_to_species = {value: key for key, value in species_to_label.items()}
label_to_species

{0: 'beluga whale',
 1: 'blue whale',
 2: 'bowhead whale',
 3: 'fin whale',
 4: 'ganges dolphin',
 5: 'gray whale',
 6: 'humpback whale',
 7: 'indoPacific dolphin',
 8: 'longfinnned whale',
 9: 'river dolphin',
 10: 'sperm whale'}

df = []

# make dataframe with path and label
for subdir in os.listdir(path):
    for filename in glob.glob(os.path.join(path, subdir, '*.jpg')):
        df.append([filename, subdir])
df = pd.DataFrame(df, columns=['path', 'label'])
df.head()

| | path | label |
|---|---|---|
| 0 | E:/python_projects/whale_dectection/trainimage... | beluga whale |
| 1 | E:/python_projects/whale_dectection/trainimage... | beluga whale |
| 2 | E:/python_projects/whale_dectection/trainimage... | beluga whale |
| 3 | E:/python_projects/whale_dectection/trainimage... | beluga whale |
| 4 | E:/python_projects/whale_dectection/trainimage... | beluga whale |
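Before building arrays it can help to look at how the 49 images are spread over the 11 classes. The sketch below hard-codes the per-class counts read off the saved-file listing earlier in this notebook; `class_counts` is a hypothetical name introduced here for illustration.

```python
from collections import Counter

# Image counts per class, read off the saved-file listing in this notebook.
class_counts = Counter({
    'beluga whale': 9, 'blue whale': 2, 'bowhead whale': 1, 'fin whale': 2,
    'ganges dolphin': 6, 'gray whale': 2, 'humpback whale': 7,
    'indoPacific dolphin': 1, 'longfinnned whale': 1, 'river dolphin': 12,
    'sperm whale': 6,
})

total = sum(class_counts.values())
# A class with a single image can land in the train split or the test split, never both.
singletons = sorted(name for name, n in class_counts.items() if n == 1)
majority_class, majority_count = class_counts.most_common(1)[0]

print(total)
print(singletons)
print(f"{majority_class}: {majority_count / total:.1%} of all images")
```

With the dataframe already in memory, `df['label'].value_counts()` gives the same counts without hard-coding them.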

def preprocess_image(path):
    # read the image, resize it to 224x224 and scale pixel values to [0, 1]
    img = plt.imread(path)
    im = cv2.resize(img, (224, 224))
    return im / 255.0

# preprocess one image and show it with its label
img = preprocess_image(df.loc[15, 'path'])
label = df.loc[15, 'label']
plt.imshow(img)
plt.title(label)

Text(0.5, 1.0, 'ganges dolphin')
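`preprocess_image` scales pixels to [0, 1]. The ImageNet weights that Keras ships for VGG19 were trained on inputs prepared by `keras.applications.vgg19.preprocess_input`, which converts RGB to BGR and subtracts per-channel ImageNet means without rescaling. The NumPy sketch below only illustrates that transformation; `vgg_style_preprocess` is a hypothetical name, and whether switching to it helps this particular model is untested here.

```python
import numpy as np

# Per-channel ImageNet means in BGR order (the "caffe"-style convention).
IMAGENET_BGR_MEAN = np.array([103.939, 116.779, 123.68], dtype=np.float32)


def vgg_style_preprocess(rgb_image):
    """Map an RGB image with values in [0, 255] to mean-centered BGR."""
    x = np.asarray(rgb_image, dtype=np.float32)
    x = x[..., ::-1]              # reverse the last axis: RGB -> BGR
    return x - IMAGENET_BGR_MEAN  # broadcast the means over height and width
```

In practice, calling the library's own `preprocess_input` on the unscaled image is simpler than reimplementing it.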

n_examples = len(df)
n_classes = len(classes)
# initialize X and Y
X = np.zeros((n_examples, 224, 224, 3), dtype=np.float32)
Y = np.zeros((n_examples, n_classes), dtype=np.float32)
# image_path (not path) so the dataset directory stored in path is not overwritten
for i, (image_path, label) in enumerate(df.values):
    X[i] = preprocess_image(image_path)
    # one-hot encode the label
    Y[i, species_to_label[label]] = 1
print(X.shape)
print(Y.shape)

(49, 224, 224, 3)
(49, 11)

# split data into train and test
X_train, X_test, Y_train, Y_test = train_test_split(X, Y, test_size=0.2, random_state=42)

# make model

model = Sequential()
# base_model is left trainable, so all VGG19 weights are fine-tuned as well
base_model = VGG19(weights='imagenet', include_top=False, input_shape=(224, 224, 3), pooling='avg')
# add new layers
model.add(base_model)
model.add(Dense(1000, activation='relu', kernel_initializer='he_uniform'))
model.add(Dense(128, activation='relu', kernel_initializer='he_uniform'))
model.add(Dense(n_classes, activation='softmax'))
model.summary()

Model: "sequential_6"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
vgg19 (Functional)           (None, 512)               20024384
_________________________________________________________________
dense_17 (Dense)             (None, 1000)              513000
_________________________________________________________________
dense_18 (Dense)             (None, 128)               128128
_________________________________________________________________
dense_19 (Dense)             (None, 11)                1419
=================================================================
Total params: 20,666,931
Trainable params: 20,666,931
Non-trainable params: 0
_________________________________________________________________

batch_size = 16

epochs = 30
# not passed to compile() below; 1e-3 is also the default learning rate of 'adam'
learning_rate = 1e-3
# compile model
model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
# train model
history = model.fit(X_train, Y_train, epochs=epochs, batch_size=batch_size, validation_data=(X_test, Y_test))

Epoch 1/30

3/3 [==============================] - 82s 24s/step - loss: 135.5160 - accuracy: 0.3077 - val_loss: 3.4185 - val_accuracy: 0.3000

Epoch 2/30

3/3 [==============================] - 67s 21s/step - loss: 5.0048 - accuracy: 0.0513 - val_loss: 2.5055 - val_accuracy: 0.0000e+00

Epoch 3/30

3/3 [==============================] - 80s 25s/step - loss: 2.1661 - accuracy: 0.2308 - val_loss: 2.8109 - val_accuracy: 0.1000

Epoch 4/30

3/3 [==============================] - 74s 22s/step - loss: 2.0730 - accuracy: 0.2821 - val_loss: 2.5080 - val_accuracy: 0.1000

Epoch 5/30

3/3 [==============================] - 76s 25s/step - loss: 2.0766 - accuracy: 0.3077 - val_loss: 2.6883 - val_accuracy: 0.1000

Epoch 6/30

3/3 [==============================] - 77s 23s/step - loss: 2.2028 - accuracy: 0.2308 - val_loss: 2.1229 - val_accuracy: 0.0000e+00

Epoch 7/30

3/3 [==============================] - 71s 22s/step - loss: 2.0935 - accuracy: 0.3333 - val_loss: 2.3295 - val_accuracy: 0.1000

Epoch 8/30

3/3 [==============================] - 81s 25s/step - loss: 2.0571 - accuracy: 0.2821 - val_loss: 2.5730 - val_accuracy: 0.1000

Epoch 9/30

3/3 [==============================] - 74s 22s/step - loss: 2.0983 - accuracy: 0.2821 - val_loss: 2.5278 - val_accuracy: 0.1000

Epoch 10/30

3/3 [==============================] - 63s 19s/step - loss: 2.0640 - accuracy: 0.2051 - val_loss: 2.3527 - val_accuracy: 0.0000e+00

Epoch 11/30

3/3 [==============================] - 70s 22s/step - loss: 2.0570 - accuracy: 0.2308 - val_loss: 2.4941 - val_accuracy: 0.1000

Epoch 12/30

3/3 [==============================] - 64s 19s/step - loss: 2.0568 - accuracy: 0.3333 - val_loss: 2.3143 - val_accuracy: 0.1000

Epoch 13/30

3/3 [==============================] - 62s 19s/step - loss: 2.1151 - accuracy: 0.2821 - val_loss: 2.2074 - val_accuracy: 0.1000

Epoch 14/30

3/3 [==============================] - 70s 22s/step - loss: 2.1120 - accuracy: 0.2821 - val_loss: 2.4766 - val_accuracy: 0.1000

Epoch 15/30

3/3 [==============================] - 64s 19s/step - loss: 2.0603 - accuracy: 0.2821 - val_loss: 2.5621 - val_accuracy: 0.1000

Epoch 16/30

3/3 [==============================] - 73s 22s/step - loss: 2.0687 - accuracy: 0.2308 - val_loss: 2.4641 - val_accuracy: 0.0000e+00

Epoch 17/30

3/3 [==============================] - 68s 21s/step - loss: 2.0662 - accuracy: 0.2308 - val_loss: 2.3878 - val_accuracy: 0.1000

Epoch 18/30

3/3 [==============================] - 77s 25s/step - loss: 2.0577 - accuracy: 0.2821 - val_loss: 2.4205 - val_accuracy: 0.1000

Epoch 19/30

3/3 [==============================] - 68s 19s/step - loss: 2.0544 - accuracy: 0.2308 - val_loss: 2.3655 - val_accuracy: 0.1000

Epoch 20/30

3/3 [==============================] - 70s 22s/step - loss: 2.0468 - accuracy: 0.3333 - val_loss: 2.3352 - val_accuracy: 0.1000

Epoch 21/30

3/3 [==============================] - 73s 23s/step - loss: 2.0125 - accuracy: 0.2821 - val_loss: 2.7639 - val_accuracy: 0.0000e+00

Epoch 22/30

3/3 [==============================] - 71s 23s/step - loss: 2.0100 - accuracy: 0.2564 - val_loss: 2.3822 - val_accuracy: 0.1000

Epoch 23/30

3/3 [==============================] - 93s 30s/step - loss: 2.0198 - accuracy: 0.3590 - val_loss: 3.3642 - val_accuracy: 0.0000e+00

Epoch 24/30

3/3 [==============================] - 81s 25s/step - loss: 1.9630 - accuracy: 0.3077 - val_loss: 3.6346 - val_accuracy: 0.1000

Epoch 25/30

3/3 [==============================] - 74s 23s/step - loss: 2.0163 - accuracy: 0.3590 - val_loss: 2.3716 - val_accuracy: 0.1000

Epoch 26/30

3/3 [==============================] - 84s 26s/step - loss: 2.3263 - accuracy: 0.2821 - val_loss: 2.1431 - val_accuracy: 0.0000e+00

Epoch 27/30

3/3 [==============================] - 76s 23s/step - loss: 2.1365 - accuracy: 0.1538 - val_loss: 2.4126 - val_accuracy: 0.1000

Epoch 28/30

3/3 [==============================] - 82s 26s/step - loss: 2.1137 - accuracy: 0.2821 - val_loss: 2.4048 - val_accuracy: 0.1000

Epoch 29/30

3/3 [==============================] - 76s 22s/step - loss: 2.0445 - accuracy: 0.2564 - val_loss: 2.3340 - val_accuracy: 0.0000e+00

Epoch 30/30

3/3 [==============================] - 77s 25s/step - loss: 2.0986 - accuracy: 0.2308 - val_loss: 2.3615 - val_accuracy: 0.0000e+00# plot loss and accuracy 2,2 subplot

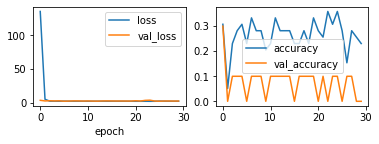

plt.subplot(2,2,1)

plt.plot(history.history['loss'],label='loss')

plt.plot(history.history['val_loss'],label='val_loss')

plt.xlabel('epoch')

plt.legend()

plt.subplot(2,2,2)

plt.plot(history.history['accuracy'],label='accuracy')

plt.plot(history.history['val_accuracy'],label='val_accuracy')

plt.legend()<matplotlib.legend.Legend at 0x2074c092130>
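To put the curves in context, the split sizes and two trivial baselines can be worked out from numbers already printed in this notebook. The sketch assumes `train_test_split` rounds a fractional `test_size` up. The class counts come from the saved-file listing, so the training subset may differ slightly.

```python
import math

n_examples, n_classes = 49, 11    # shapes printed for X and Y
test_size, batch_size = 0.2, 16

n_test = math.ceil(test_size * n_examples)   # fractional test size is rounded up
n_train = n_examples - n_test
steps_per_epoch = math.ceil(n_train / batch_size)

# Cross-entropy of a model that always outputs the uniform distribution.
uniform_loss = math.log(n_classes)

# Cross-entropy of a model that always outputs the overall class frequencies.
counts = [9, 2, 1, 2, 6, 2, 7, 1, 1, 12, 6]
prior_loss = -sum(c / n_examples * math.log(c / n_examples) for c in counts)

print(n_train, n_test, steps_per_epoch)
print(round(uniform_loss, 3), round(prior_loss, 3))
```

The three steps per epoch and the validation accuracies in multiples of 0.1 in the log agree with 39 training and 10 test images. A training loss that plateaus between these two baselines suggests the network is predicting something close to the class frequencies rather than separating the species.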

# save model

model.save('model.h5')

# load model

from tensorflow.keras.models import load_model

model=load_model('model.h5')

# make predictions
plt.imshow(X_test[1])
plt.title(label_to_species[np.argmax(Y_test[1])]+'_model_prediction_'+label_to_species[np.argmax(model.predict(X_test[1:2]))])
# print test accuracy with %-formatting
#print('Test accuracy: %.2f' % (model.evaluate(X_test,Y_test)[1]*100))
# print test accuracy as a percentage, three decimal places, with an f-string
print(f'Test accuracy: {model.evaluate(X_test,Y_test)[1]*100:.3f}')

1/1 [==============================] - 3s 3s/step - loss: 2.3615 - accuracy: 0.0000e+00
Test accuracy: 0.000
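A single accuracy figure hides which species are confused with which. A minimal NumPy sketch of a confusion matrix built from one-hot labels and predicted probabilities follows; `confusion_matrix` here is a hypothetical helper written for this example, not the scikit-learn function of the same name.

```python
import numpy as np


def confusion_matrix(y_true_onehot, y_prob):
    """Rows are true classes, columns are predicted classes."""
    y_true_onehot = np.asarray(y_true_onehot)
    y_prob = np.asarray(y_prob)
    n_classes = y_true_onehot.shape[1]
    true_idx = np.argmax(y_true_onehot, axis=1)
    pred_idx = np.argmax(y_prob, axis=1)
    matrix = np.zeros((n_classes, n_classes), dtype=int)
    # np.add.at accumulates correctly when the same (row, column) pair repeats.
    np.add.at(matrix, (true_idx, pred_idx), 1)
    return matrix
```

In this notebook it could be called as `confusion_matrix(Y_test, model.predict(X_test))`. The trace divided by the sum of all entries is the accuracy.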

def model_predict(path):
    img = plt.imread(path)
    img = cv2.resize(img, (224, 224))
    img = img / 255.0
    img = img.reshape(1, 224, 224, 3)
    return label_to_species[np.argmax(model.predict(img))]

model_predict('E:/python_projects/whale_dectection/newimage/ganges 5.jpg')

'beluga whale'

# redefine model_predict to reuse preprocess_image and also return the confidence
def model_predict(path):
    im = np.array([preprocess_image(path)])
    prediction = model.predict(im)
    # return prediction label and probability
    return label_to_species[np.argmax(prediction)], np.max(prediction)

plt.figure(figsize=(10, 10))
# the 2x2 grid below has room for at most four images
for n, filename in enumerate(os.listdir('E:/python_projects/whale_dectection/newimage')):
    prediction, confidence = model_predict('E:/python_projects/whale_dectection/newimage/' + filename)
    plt.subplot(2, 2, n+1)
    # add title to each subplot
    plt.title(f'model predicts {prediction} ({confidence*100:.2f}%)')
    plt.imshow(preprocess_image('E:/python_projects/whale_dectection/newimage/' + filename))
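With 11 classes and low confidence, the runner-up classes can be as informative as the top one. The sketch below returns the `k` most probable labels from one softmax output; `top_k_predictions` is a hypothetical helper, and `labels` is assumed to be an index-to-name mapping such as `label_to_species`.

```python
import numpy as np


def top_k_predictions(probabilities, labels, k=3):
    """Return the k (label, probability) pairs with the highest probability."""
    probs = np.asarray(probabilities, dtype=float).ravel()
    # argsort is ascending, so reverse it and keep the first k indices.
    order = np.argsort(probs)[::-1][:k]
    return [(labels[int(i)], float(probs[i])) for i in order]
```

For one image this could be used as `top_k_predictions(model.predict(im)[0], label_to_species)`.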